Journal On Camera Latency Measure

#Measuring Camera Latency: A Personal Journal

Last week I was experimenting WebXR with webcam, it raised a interesting question for me: how good is the camera latency? After spending another weekend on the measurement I bring this blog, an incomplete journal on Camera Latency Measuring.

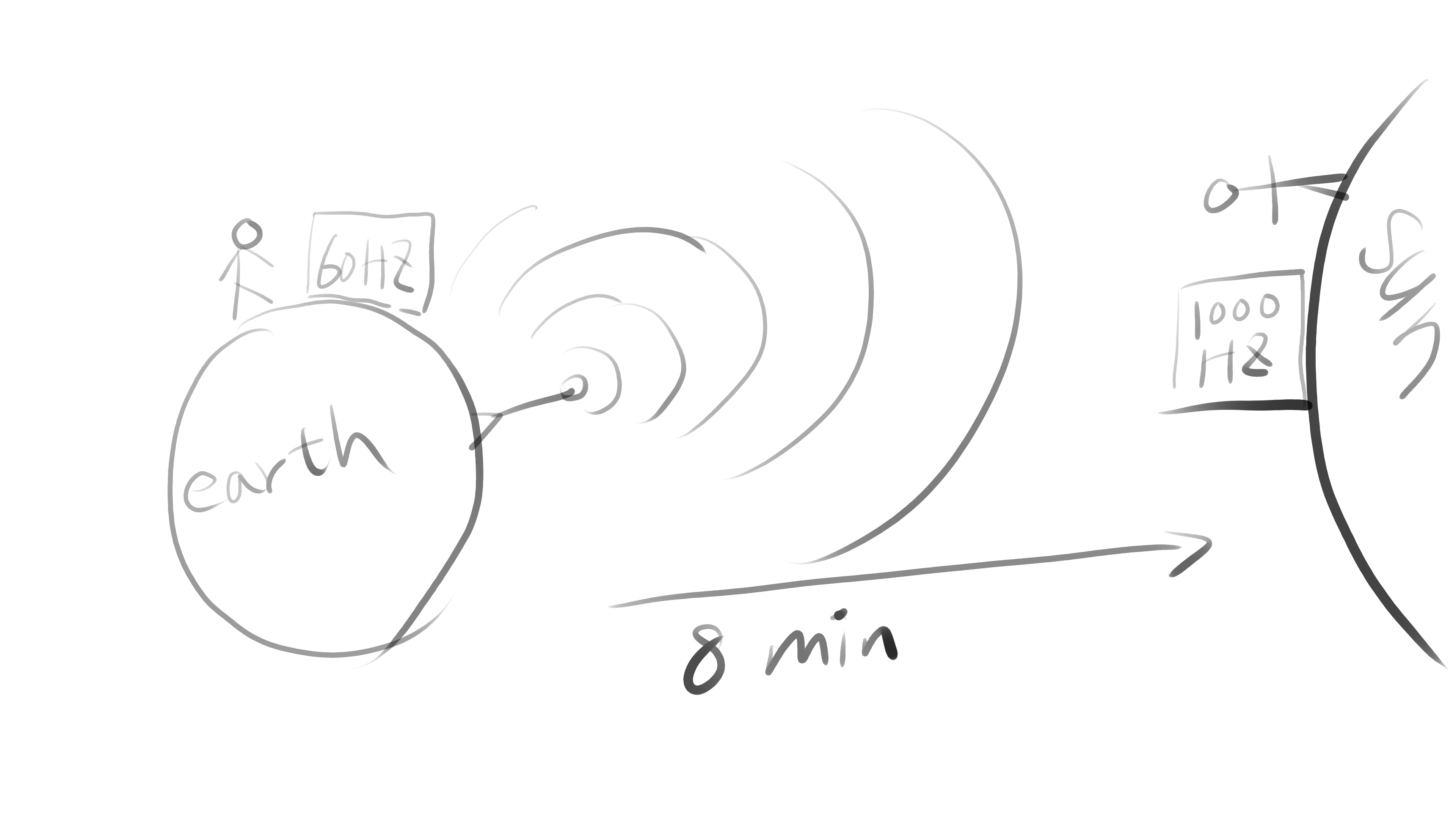

When talking about ‘Latency’, it’s easily being mixed up with ‘Frequency’. For example slogan of gaming monitor mention ‘High Refresh Rate’ brings you ‘Low latency’. Let’s consider a real-time football broadcast, your friend watches it on an 60HZ TV on earth, while you’re watching on 1000HZ TV on the sun, then your eyes feels much smoother motion than your friend, while your latency is still worse since signal takes 8 minutes to arrive at 1000HZ TV.

This example indicates that frequency only determine how small the time delta between two signal, and Latency means how long it takes the signal from real-world to the destination. It’s also the reason TV having ‘gaming’ mode to turn off time consuming image enhancing algorithm. Refresh rate stay the same, but latency from digital input to physical output (photon) will be lower. My goal is to measure the time that spend from camera shutter complete read the image, up to such image arrived into our application’s memory. Before building up my own setup, I firstly tried a script from github

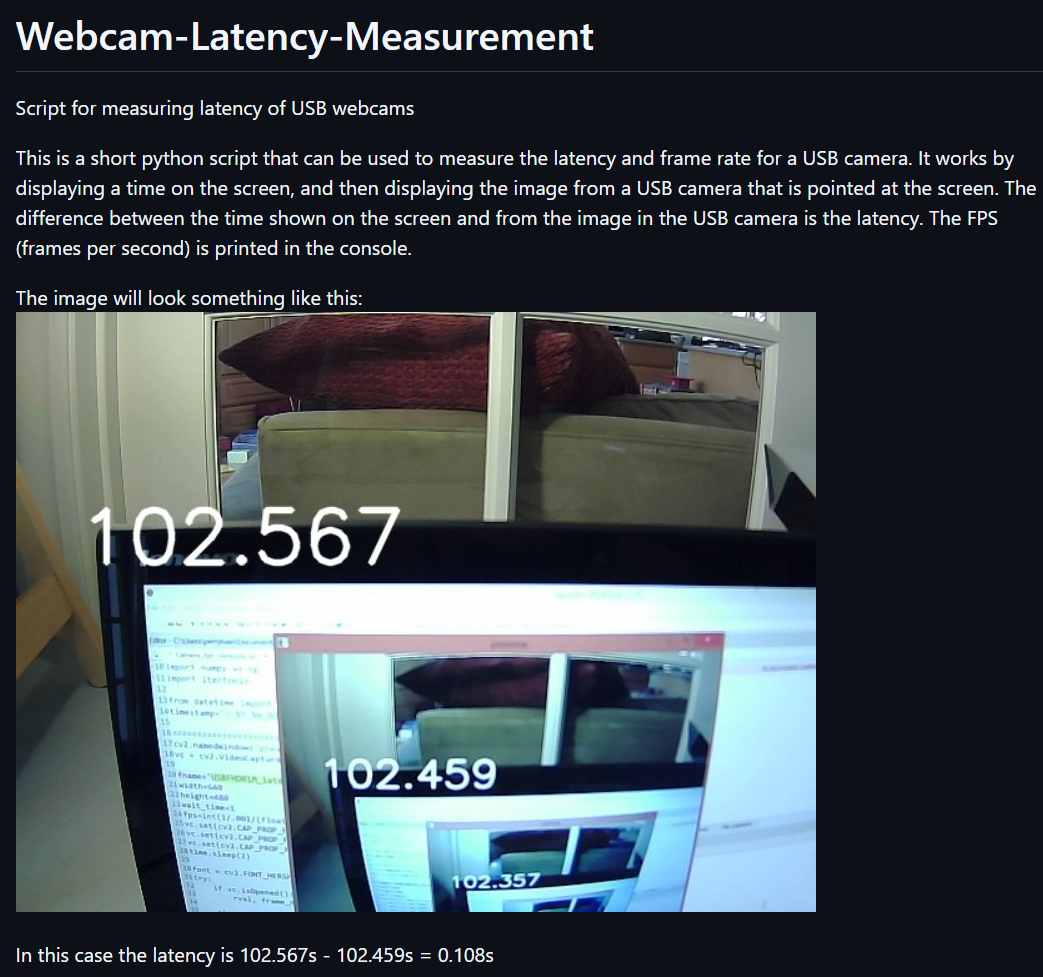

the logic is straightforward:

while read camera image

draw timestamp on camera image

show this image on screen

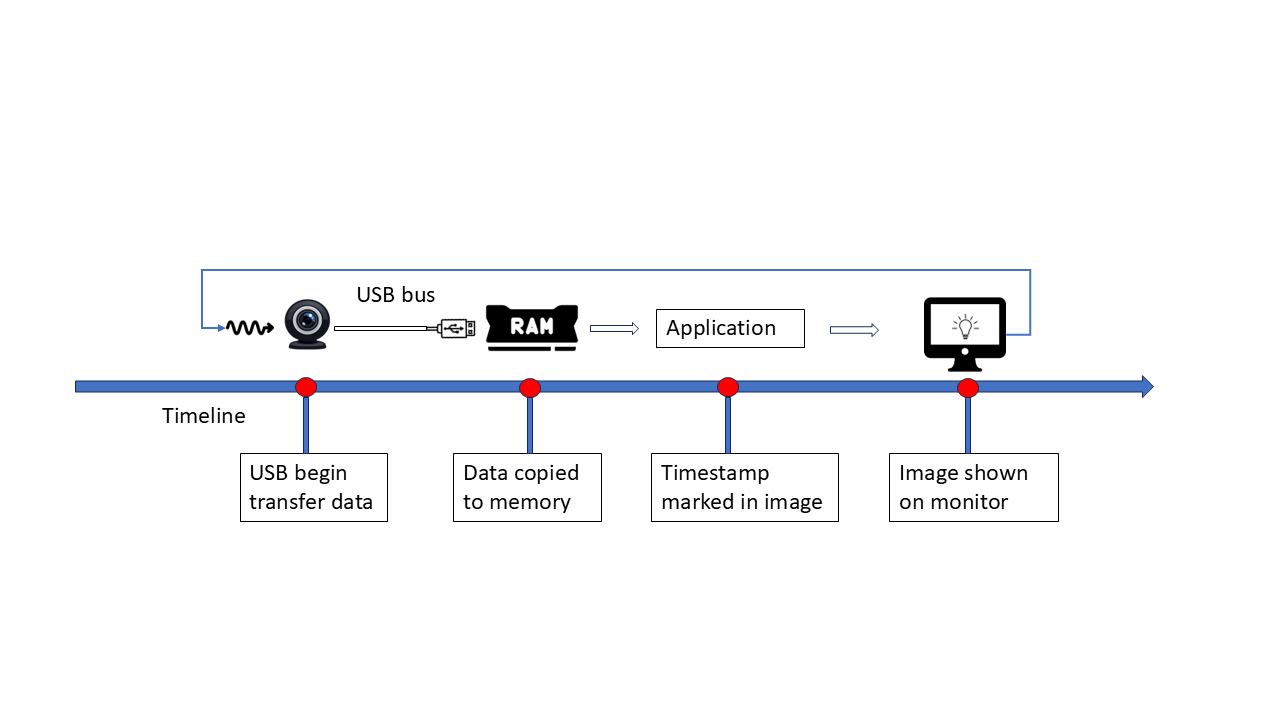

when I tried this script with my webcam (30FPS), I got 32 milliseconds and 36 milliseconds, and what interested me is the real-time FPS output, it shows 27.x FPS or 31.x FPS seems… Perfectly match the latency since 32 x 31 ~ 1000 and 36 x 28 ~ 1000, is this by accident? Let’s drawing a diagram to see how different blocks connected together:

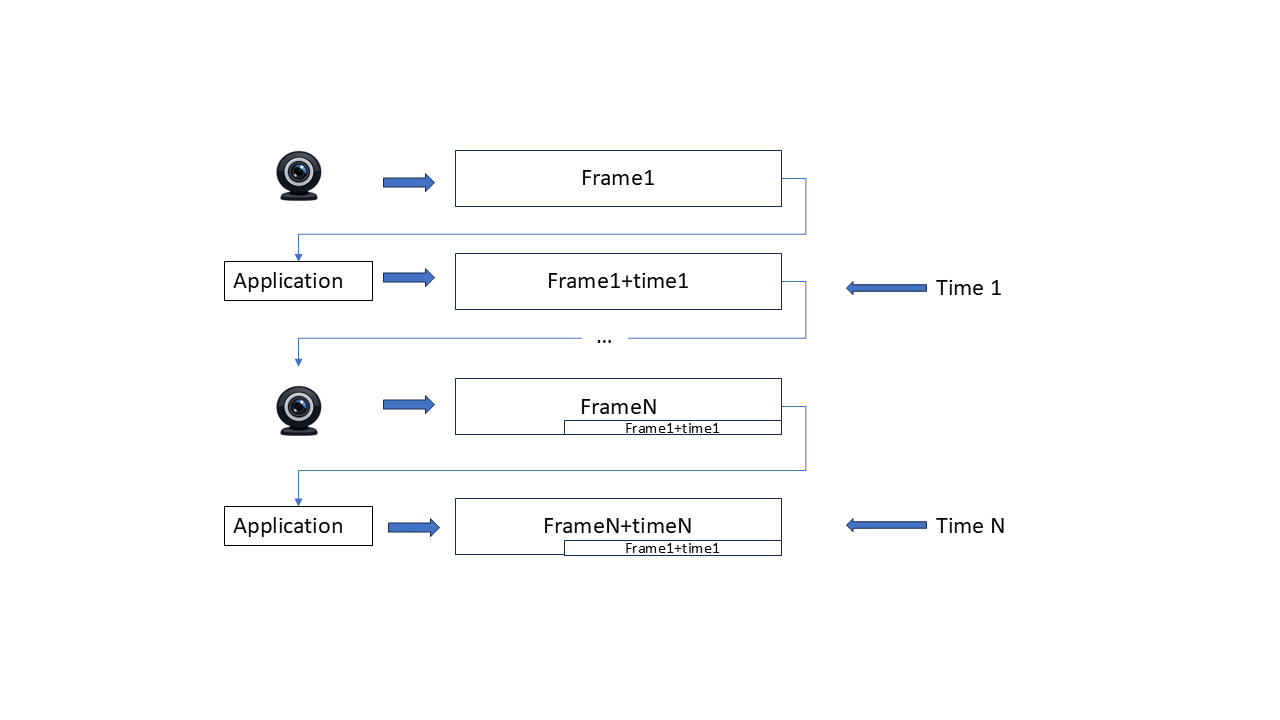

Let’s draw in it in another way, it should give you better understanding:

The two timestamps appeared on one image, is always the timestamp we mark in the loop, when we calculate the delta between these two timestamp, of course it’s just the time in-between two capture time, has nothing to do with the transfer time! The issue is that the timestamp used as start is bounded to camera frequency, when camera latency is lower than that interval, start timestamp is already out-of-date (since no camera update during that period), the measured latency precision also bounded to the interval.

Inspired by that script, we should decouple the startup timestamp mark frequency from camera frequency, also refresh as fast as possible. My monitor worked at 165HZ, much higher than webcam (30), should be good enough for this task.

First Try:

Using console text print, it works like this:

Result: failed, flushing too fast, camera can’t catch clear text at all

Second Try:

Spread the timestamp text along whole line, so individual timestamp will stay stable for a while. Result: kind of worked

This approach also have other issues:

- flushing doesn’t aligned to V-sync, captured time is not most accurate

- App run under GUI virtual terminal, which might have it’s internal buffering lead extra latency

- GUI output controlled by window manager, hard to reason about buffer queue.

- Manually look into every image required, not automatic

Firstly I tried to put Linux running under VGA/SVGA mode (to get ride of KMS/DRM stack), but after some rounds searching with chatgpt/google, I found such functionality already implemented by graphics card (instead of monitor), and on UEFI this is replaced by GOP, No support for high refresh rate other than 60HZ is also a major problem. Then I looked into KMS/DRM information, it already support what I need

- complete frame buffer control, no window system/manager in charge.

- atomic flip, allow perfect match to V-sync

- support OpenGL(ES) painting, GPU still take the heavy lifting

And then I find a library SRM which is a thin wrapper of KMS/DRM allow you quickly start OpenGL(ES) drawing. This time I use QRcode to display timestamp information on screen, so automatic post-processing can be applied. Similar to the text painting, the painting also arrange QrCode at different area of screen and stay for a while, with this trick, even monitor is flushing at 165 HZ, one QRCode can stay for more than 1/165 second, the more you arrange, the longer it stay, 30 FPS camera can also have enough time to capture stable image. Without this, a QRCode may already show on screen and then disappeared without camera notice. DRM/KMS also provides notify callback when flip is finished, allow us to record accurate timing information.

for KMS/DRM opengl painting (with SRM), it works like this way:

- you receive page flipped notification, knows that previous frame buffer already being flipped, record timing information there.

- paintGL callback will only trigger exactly once before next frame flip, you can draw anything for next frame, then using srmConnectorRepaint to swap it, it will be used for next available flip

- since there’s still no way to measure the time spend on page flip itself (after we sending flip to kernel, up to the monitor start sending photon for that contents). The best we can do is to use the page flipped (of previous time) timestamp, and assume time that contents start displaying on monitor shouldn’t be too late(actually this doesn’t matter too much, as we can see later, our measure is a closed loop by an unique clock source so that part of time also being considered).

I start with SRM examples, to recroding the pageFlipped timing, got this plot:

FPS caluclated from this timing is 165.0786808021504

the monitor information reported by xrandr

2560x1440 (0x1c2) 645.000MHz +HSync -VSync *current +preferred

h: width 2560 start 2568 end 2600 total 2640 skew 0 clock 244.32KHz

v: height 1440 start 1446 end 1454 total 1480 clock 165.08Hz

FPS calculated fro mthis information should be 645000000 / 2640 / 1480 ~ 165.07985 FPS differences < 1e-5, should be good enough.

Then create a QrCode painting logic with OpenGL(ES), with help of chatgpt/google. But testing/debugging on KMS/DRM application is quite unfriendly because your code takes control of whole frame buffer (so ctrl+alt+FN switching won’t work), so I wrote a simple glut entry for glue, then testing everything under normal x11 environment. Once it’s done, switching to SRM will be painless. Then I use my phone’s 240FPS slow motion to verify such setup actually work (gif play already slow down):

by comparing the frame timestamp (using it’s FPS) to the timestamp on every frame, we know if that timestamp is accurate or not.

ffprobe -v error -select_streams v:0 -show_entries stream=avg_frame_rate,r_frame_rate -of default=noprint_wrappers=1 slowmo_clock_boottime.MOV

r_frame_rate=240/1

avg_frame_rate=154080/641 ~ 240.37 FPS

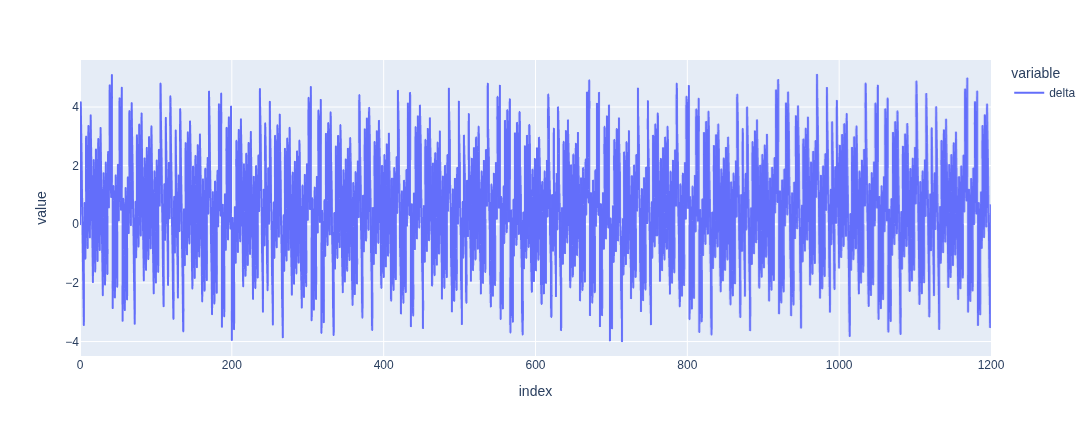

the trick here is to use avg_frame_rate (actual file frame rate) instead of r_frame_rate. Consumer grade slow motion recording usually deployed variable frame rate, 240 is not always the exactly value. then compare the delta between slowmo frame timestamp and the latest QrCode timestamp on that frame (by aligning first slowmo frame time to first frame latest Qrcode timestamp). we got following result:

,

,

Here we see the jitter between two timestamp, since monitor refresh timing doesn’t aligned to slowmo capture timing, it’s possible that for one monitor fresh, the phone just capture latest content, but for another fresh, that timestamp already shown for 6 ms and being captured by phone

combined with the slow motion video (no more than one new image appear at same time), now I’am confident to say the KMS/DRM Qrcode display code is working as expected. Now using my webcam for similar analysis:

but before testing , first verify v4l2 information, my webcame support variable frame rate, when it detects not so much motion in scene, it automatically reduce the frame rate, 30 FPS setup might gives back 15 fps streaming (and if you wave hands in front of it, you find it restored to 30 FPS) which will be annoying for testing.

v4l2-ctl --all

...

exposure_dynamic_framerate 0x009a0903 (bool) : default=0 value=0

# turn it off by

v4l2-ctl -d /dev/video0 -c exposure_dynamic_framerate=0

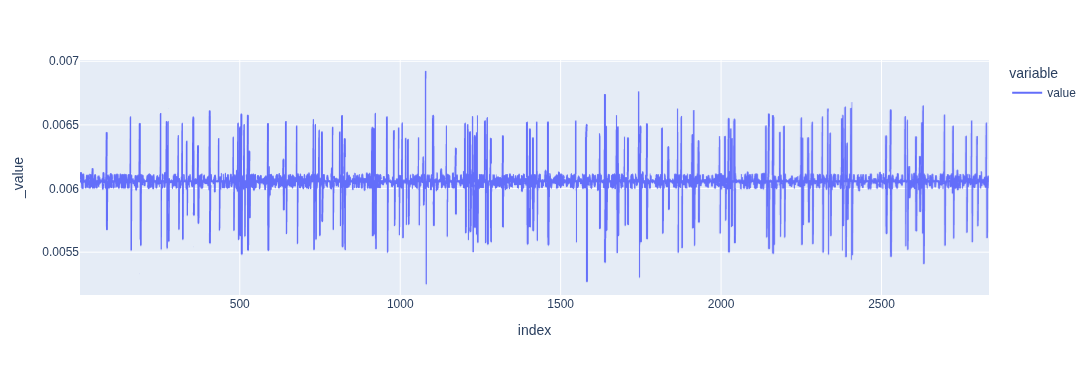

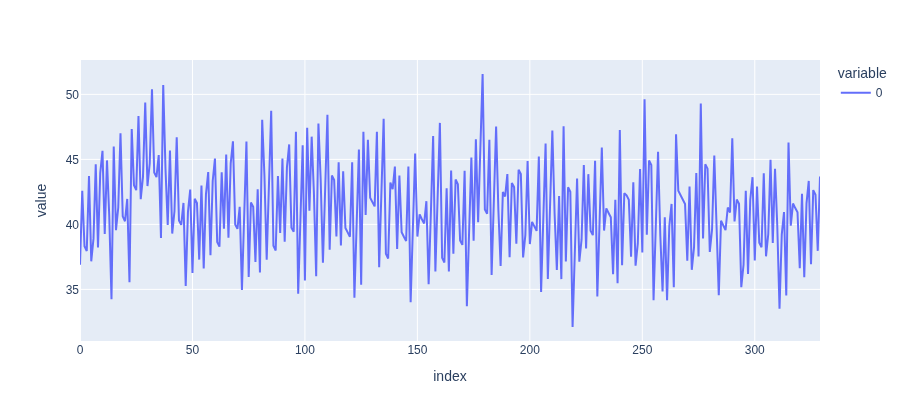

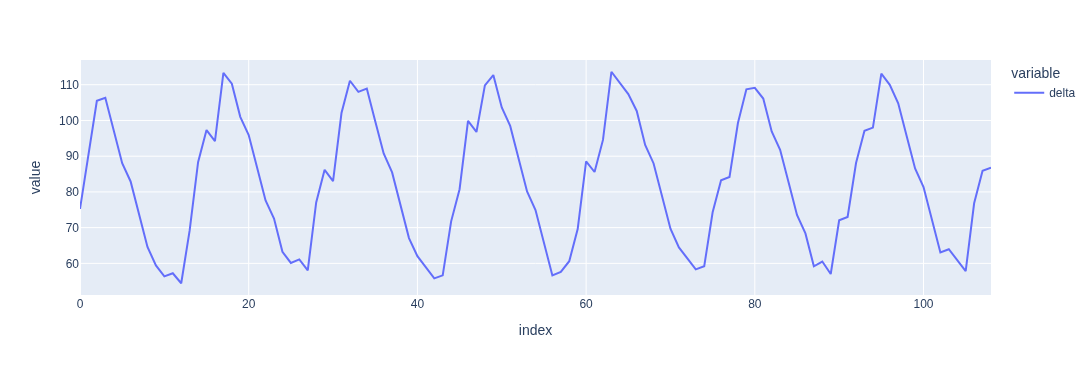

Then I use my webcam to capture the qrcode screen, calculate the duration from latest qrcode timestamp of frame, to the timestamp of that frame being captured, I got following plot:

,

,

this plot makes more sense, since the monitor and webcam are working at different frequency, the latency (by using timestamp shown on monitor) should have jitter just like slowmo-timestamp plot, instead of a fixed value. The best case latency is 32ms, Note, we’re using previous frame page flipped callback time as the QrCode, and mark the received back time of the image,

It is a closed loop, all possible latency already considered (including the time spend on signal travel the cable and became photon from monitor) so this is the “worst case of” best case value. “best case of” best case latency can be 32ms - 1/165.07868 sec ~ 26ms, (if the duration starts when page flipped up to next frame being on display is exactly 1 monitor frame time) and due to monitor flush rate not aligned to webcam shutter, it’s possible that shutter just missed the new monitor contents, then that contents being captured by next shutter, which is the worst case (51.5 ms). In theory the worst case latency should be equal to best case latency + 1 webcam frame time (33ms) here we got 19.5 ms, because the camera shutter is not instant, it needs to work earlier before next frame transfer. the average latency (worst case) is 41.1853.

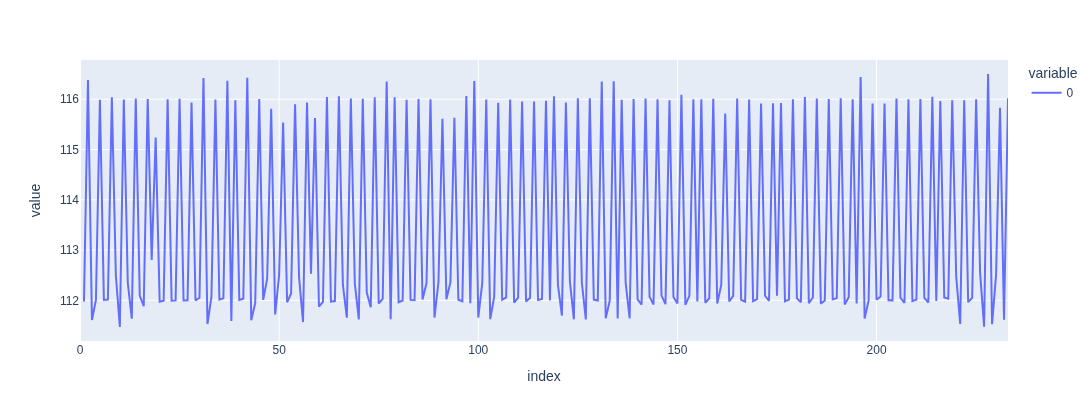

Now let’s verify the latency for low FPS setup. By forcing the FPS to 10, we can observe the differences between my measure and original script. we can predict that original script bound the update frequency to camera frequency, so it won’t gives latency that lower than 1/10 sec. I also made some changes to that script, painting qrcode instead of text timestamp, also recording the elapsed time since last time received image. Firstly let’s see the time spend between two capture

,

,

the fps is a little slow than 10, 8.9 FPS most of time, and if we compare this data to the delta of two timestamp on image (by qrcode), we will see

capture delta , qrcode delta

0.1120000000000001, 0.112,

0.11600000000000055, 0.116,

0.1120000000000001, 0.112,

0.11199999999999921, 0.112,

0.11600000000000055, 0.116,

...

0.11200000000000188, 0.112,

0.11199999999999832, 0.112,

0.11599999999999966, 0.116,

0.11200000000000188, 0.112,

0.11199999999999832, 0.112,

0.11600000000000321, 0.116,

0.11199999999999832, 0.112,

0.11199999999999832, 0.112

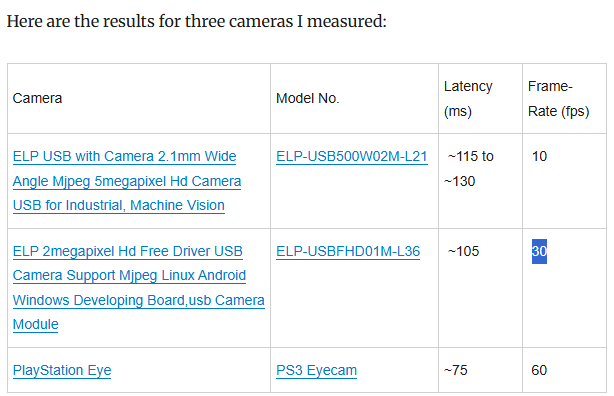

this clearly shows that the ‘latency’ original script measure, is essential the delta between two capture, not the time signal travel to application. It’s easy understandable if we consider a camera that only takes 1 picture every 1 hour, due to the text drawn on image only update once per hour, the latency measured by original script is always an hour. the time signal traveling to our application is much faster, but dominated by 1 hour interval time. So Original script result is only meaningful when transfer latency is much longer than one interval period. (it also link to a table of some camera tested result, shows that the latency are always greater than one interval)

since I run original script with 30FPS setup and always got exactly 1 interval delta, it prove that the latency should be always less than one interval. Note, this conclusion doesn’t conflict with previous 51.5ms worst case result (compared to 33ms interval), the latency actually came from three parts:

- the time spend on signal travel over usb to our application memory, which is the fixed part

- the time camera wait from previous shutter complete, up to next shutter starts, which is FPS dependent

- the time when latest timestamp is flushing on screen. For 165HZ monitor, even we flush at every V-sync, one fixed timestamp will stay on screen for about 6 milliseconds, which also add latency, just like the time differences we use 240 slowmo to capture 165 monitor image.

for reason 2 and 3, we can still have worst case latency that longer than one interval, but the captured timestamp delta perfectly matching interval, it can prove that before camera capture next frame, the exactly previous frame already being captured and shown on display, no in-between frames pending in queue, (otherwise two timestamps in one image must have delta >= 2*interval , not exactly equals to 1 interval)

now let’s measure it with my approach, this time it’s more tricky, since the camera doesn’t work the exactly way at different FPS, for 30 FPS streaming, even camera can’t capture every monitor update, every frame still shows the correct pattern of image: clearest image always appear at bottom right (because we update qrcode top-bottom, left-right) but 10FPS is like this:

,

,

no clear image pattern. looks like all qrcode appear/disappear at same time, lead lots of empty image. my suspection is the shutter time too long, the short appear time image signal will be ‘smooth’ out. so I increase the number of qrcode grid from 3x3 to 4x4, also increasing the stay time of every qrcode, then the capture like this

,

,

now I have better result

,

,

average latency 82.9 ms, best case 53 ms, worst case 113 ms

How Nvidia measure input E2E latency? it’s called (LDAT)[https://developer.nvidia.com/nvidia-latency-display-analysis-tool], a photon sensor attached in front of monitor, and registered as mouse. It measure the elapsed time after emulated mouse click up to corresponding flush on monitor, This Digital Foundry Video shows how it works:

https://www.youtube.com/watch?v=TuVAMvbFCW4 at 3:21